Pitt’s eButton prototype now determines portion sizes based on shapes

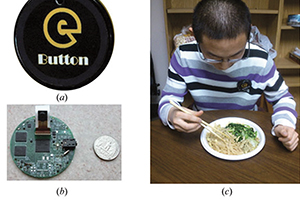

A wearable, picture-taking health monitor created by University of Pittsburgh researchers has received a recent facelift. Now, in addition to documenting what a person eats, the eButton prototype can accurately match those images against a geometric-shape library, providing a much easier method for counting calories.

Published in Measurement Science and Technology, the Pitt study demonstrates a new computational tool that has been added to the eButton, a device that fastens to the shirt like a pin. Using its newly built comprehensive food-shape library, the eButton can now extract food from 2D and 3D images and, using a camera coordinate system, evaluate that food based on shape, color, and size.
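
The article does not describe the eButton's camera model. A common assumption for this kind of measurement is the pinhole model, in which a point (X, Y, Z) in the camera coordinate system lands at pixel (f_x·X/Z + c_x, f_y·Y/Z + c_y). The sketch below uses hypothetical class and parameter names. It shows why depth matters: a pixel measurement can only be turned back into real-world units once the distance Z is known.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PinholeCamera:
    """Hypothetical pinhole camera model; all intrinsics are in pixels."""

    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point, x
    cy: float  # principal point, y

    def project(self, x: float, y: float, z: float) -> Tuple[float, float]:
        """Map a point in camera coordinates (z = distance in front of the lens) to a pixel."""
        if z <= 0:
            raise ValueError("point must lie in front of the camera (z > 0)")
        return (self.fx * x / z + self.cx, self.fy * y / z + self.cy)

    def back_project(self, u: float, v: float, z: float) -> Tuple[float, float, float]:
        """Recover the camera-frame point at depth z that projects to pixel (u, v)."""
        if z <= 0:
            raise ValueError("depth must be positive")
        return ((u - self.cx) * z / self.fx, (v - self.cy) * z / self.fy, z)


# Example: a point 5 cm right of the optical axis, 40 cm from the lens (units: meters).
cam = PinholeCamera(fx=800.0, fy=800.0, cx=320.0, cy=240.0)
u, v = cam.project(0.05, 0.0, 0.40)
```

Halving the distance doubles the pixel offset. For this reason, the same food can look larger or smaller in different frames, and a depth reference is needed before any size estimate.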

“Human memory of past eating is imperfect,” said Mingui Sun, lead investigator and Pitt professor of neurosurgery and bioengineering and professor of electrical and computer engineering. “Visually gauging the size of a food based on an imaginary measurement unit is very subjective, and some individuals don’t want to track what they consume. We’re trying to remove the guesswork from the dieting process.”

The eButton, which is built with a low-power central processing unit, a random-access memory communication interface, and an Android operating system, now includes a library of nine common food shapes: cuboid, wedge, cylinder, sphere, top and bottom half spheres, ellipse, half ellipse, and tunnel. The device snaps a series of photos while a person is eating, and its new algorithm goes to work: removing the background, isolating the food, and measuring its volume by projecting the selected 3D shape onto the 2D photograph and fitting it through a series of mathematical equations.
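
The article names the shapes but not their parameterizations or the fitting equations. As a hedged illustration, the sketch below pairs textbook volume formulas with a simple pinhole scale conversion (real length ≈ pixel length × depth ÷ focal length). It assumes the measured edges are roughly parallel to the image plane at a known depth. The dictionary keys, parameter names, the reading of "ellipse" as an ellipsoid, and the wedge-as-triangular-prism model are all assumptions. The tunnel shape is left out because its geometry is not described here. The published method fits a projected 3D model to the image, which this sketch does not attempt.

```python
import math
from typing import Callable, Dict

# Hypothetical shape library: textbook volume formulas keyed by shape name.
SHAPE_VOLUMES: Dict[str, Callable[..., float]] = {
    "cuboid": lambda length, width, height: length * width * height,
    # Assumption: a wedge is a right triangular prism.
    "wedge": lambda length, width, height: 0.5 * length * width * height,
    "cylinder": lambda radius, height: math.pi * radius**2 * height,
    "sphere": lambda radius: 4.0 / 3.0 * math.pi * radius**3,
    "top_half_sphere": lambda radius: 2.0 / 3.0 * math.pi * radius**3,
    "bottom_half_sphere": lambda radius: 2.0 / 3.0 * math.pi * radius**3,
    # Assumption: "ellipse" / "half ellipse" mean an ellipsoid with semi-axes a, b, c.
    "ellipsoid": lambda a, b, c: 4.0 / 3.0 * math.pi * a * b * c,
    "half_ellipsoid": lambda a, b, c: 2.0 / 3.0 * math.pi * a * b * c,
}


def pixels_to_metric(length_px: float, depth: float, focal_px: float) -> float:
    """Pinhole scale conversion, assuming the edge is roughly parallel to the image plane."""
    return length_px * depth / focal_px


def estimate_volume(shape: str, depth: float, focal_px: float, **dims_px: float) -> float:
    """Convert each pixel dimension to metric units, then apply the shape's formula."""
    if shape not in SHAPE_VOLUMES:
        raise KeyError(f"unknown shape: {shape!r}")
    dims = {name: pixels_to_metric(px, depth, focal_px) for name, px in dims_px.items()}
    return SHAPE_VOLUMES[shape](**dims)
```

For example, consider a cylinder whose top radius spans 100 pixels and whose height spans 60 pixels, seen at 40 cm with an 800-pixel focal length. It maps to a 5 cm radius and a 3 cm height, or about 236 cm³.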

The Pitt team tested the new design on 17 popular foods, such as jelly, broccoli, hamburgers, and peanut butter. Using a Logitech webcam, they captured five high-resolution images at different locations on diners’ plates. They also tested the eButton in real-world dining scenarios, in which diners wore the device on their chests to record their eating. For each image, the eButton’s new method automatically estimated the food portion size after the background was removed. To account for food left uneaten, the Pitt team analyzed the last photograph taken during each meal. The volume of this leftover food was estimated and subtracted from the original portion size documented in earlier photographs.
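
The leftover correction described above amounts to a subtraction. The minimal sketch below assumes that each photo yields a volume estimate and that several views of the same food are simply averaged. The article does not say how multiple images are combined, and the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence


def portion_estimate(per_image_volumes: Sequence[float]) -> float:
    """Combine per-image volume estimates; plain averaging is an assumption."""
    if not per_image_volumes:
        raise ValueError("need at least one volume estimate")
    return mean(per_image_volumes)


def consumed_volume(initial: Sequence[float], leftover: Sequence[float]) -> float:
    """Amount eaten = initial portion minus what remains in the last photo(s).

    An empty `leftover` means the plate was cleared. The result is clamped at
    zero so that estimation noise cannot produce negative consumption.
    """
    remaining = portion_estimate(leftover) if leftover else 0.0
    return max(portion_estimate(initial) - remaining, 0.0)
```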

“For food items with reasonable shapes, we found that this new method had an average error of only 3.69 percent,” said Sun. “This error is much lower than that made by visual estimations, which result in an average error of about 20 percent.”
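
The article does not define how the 3.69 percent and 20 percent averages were computed. One common definition, used here purely as an assumption, is the mean absolute percentage error over all test items. The function name is hypothetical.

```python
from typing import Sequence


def mean_percent_error(estimated: Sequence[float], actual: Sequence[float]) -> float:
    """Mean absolute percentage error: average of |estimate - truth| / truth, times 100."""
    if len(estimated) != len(actual) or len(actual) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    total = 0.0
    for est, truth in zip(estimated, actual):
        if truth <= 0:
            raise ValueError("true volumes must be positive")
        total += abs(est - truth) / truth * 100.0
    return total / len(actual)
```

Taking the absolute value prevents overestimates and underestimates from cancelling out, which a signed average would allow.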

While the eButton is still not available commercially, Sun hopes to get it on the market soon. He and his team are now fine-tuning the device, working to improve the accuracy of portion-size estimates for irregularly shaped foods.

Other collaborators include, from Pitt, Professor of Psychiatry John Fernstrom, Research Assistant Professor of Neurological Surgery Wenyan Jia, former Pitt Postdoctoral Research Fellow Hsin-Chen Chen, and Yaofeng Yue, a Pitt graduate student. Also involved were National Cheng Kung University Professor of Computer Science Yung-Nien Sun in Taiwan, and Zhaoxin Li, a graduate student from Harbin Institute of Technology in China who is visiting Sun’s laboratory.
